What we will achieve:

- Centralized Terraform State Management: Setting up an AWS S3 Bucket for Shared State Across Team Environments

- Run a web application on an AWS EC2 VM using Terraform

Amazon S3

Store and retrieve any amount of data from anywhere

Amazon S3 (Simple Storage Service) is the general-purpose object storage service in AWS. Common use cases include backup and restore, static website hosting, global content distribution through integration with Amazon CloudFront, and serving as a central data lake for analytics services.

We will use an S3 bucket to store the Terraform state so that all team members share the same state.

Project source code Github: https://github.com/hasanashik/terraform_aws/tree/main

Repository introduction:

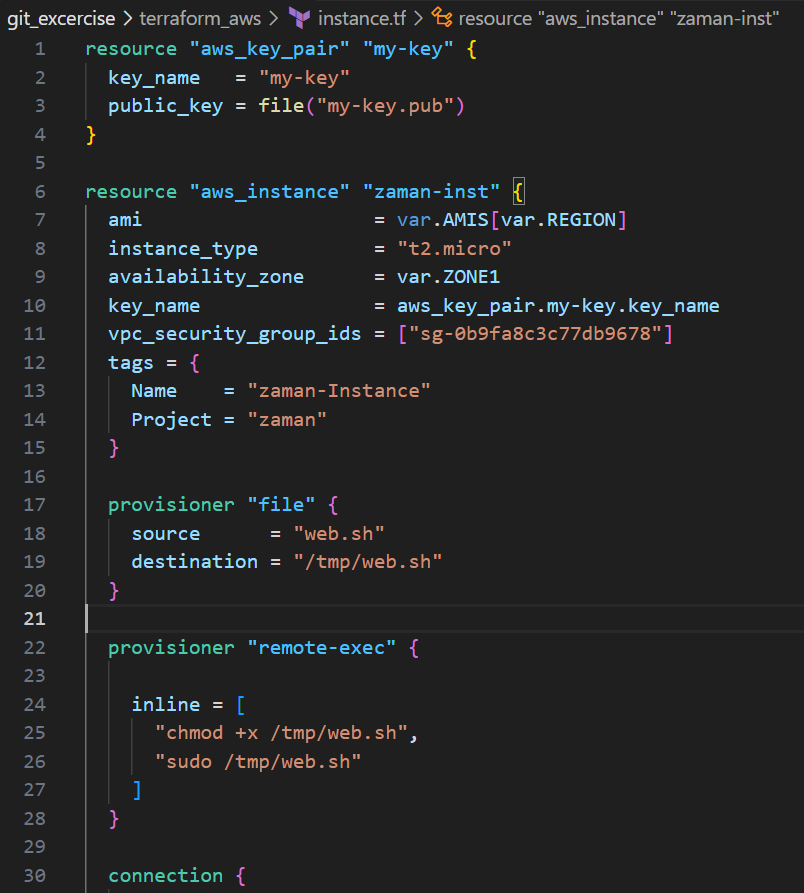

In the terraform_aws directory we have 4 Terraform files:

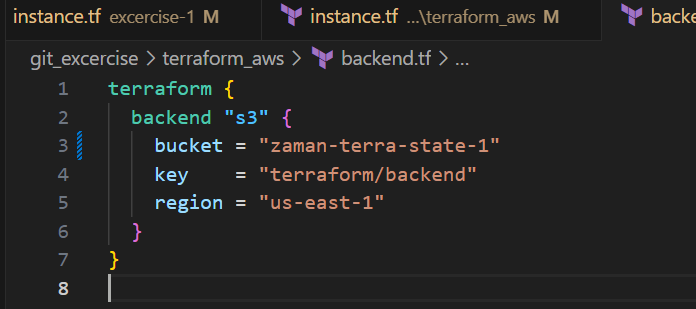

- backend.tf: Configures the S3 bucket that maintains the Terraform state

- instance.tf: Defines the required AWS resources

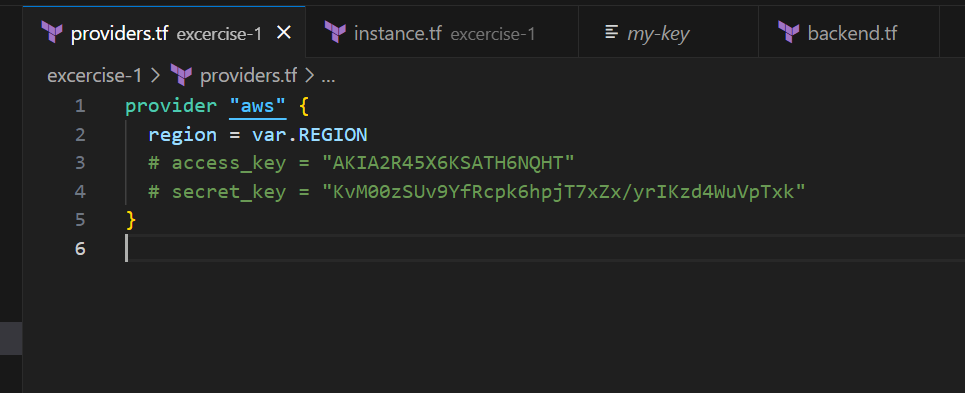

- providers.tf: Defines the cloud provider

- vars.tf: Defines variables used in the other Terraform files

Generating an RSA key with default settings:

We need an SSH key pair, which is used when creating the resources in AWS. To generate one, run: ssh-keygen

This creates a private key file and a matching public key file (with a .pub extension).

Part 1 Steps:

- Create an S3 bucket and a directory in the bucket

- Write backend.tf

- Run terraform apply

Creating the S3 bucket and a directory in the bucket:

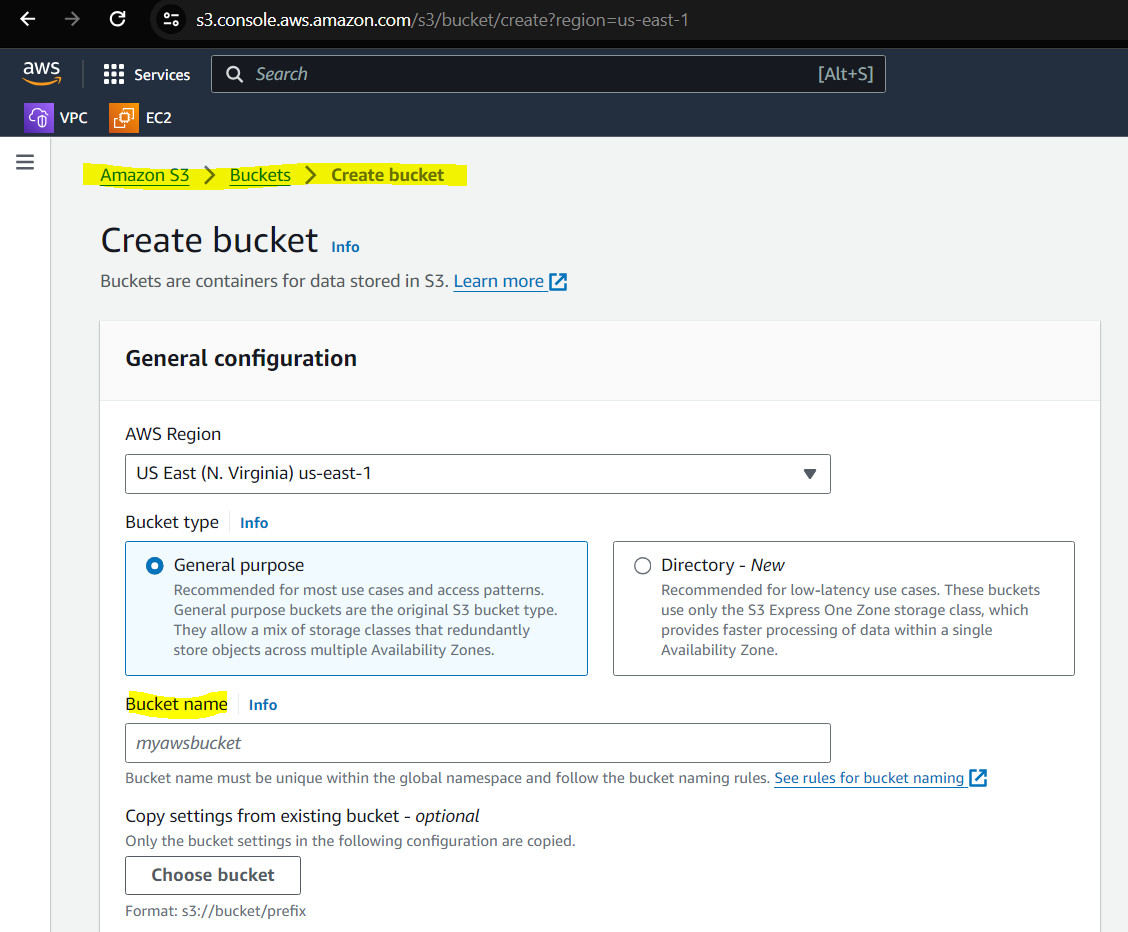

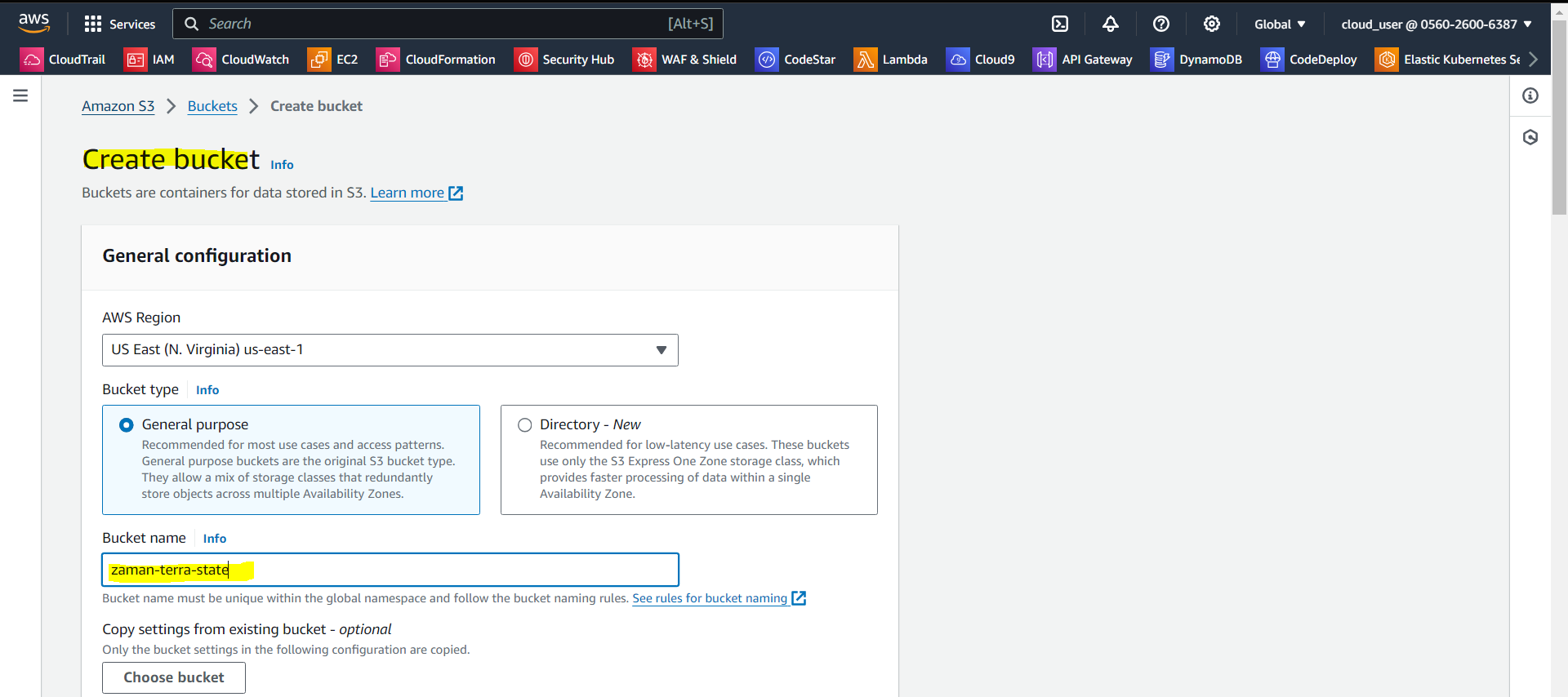

Go to: Amazon S3 > Buckets > Create bucket

Enter a bucket name and click Create bucket.

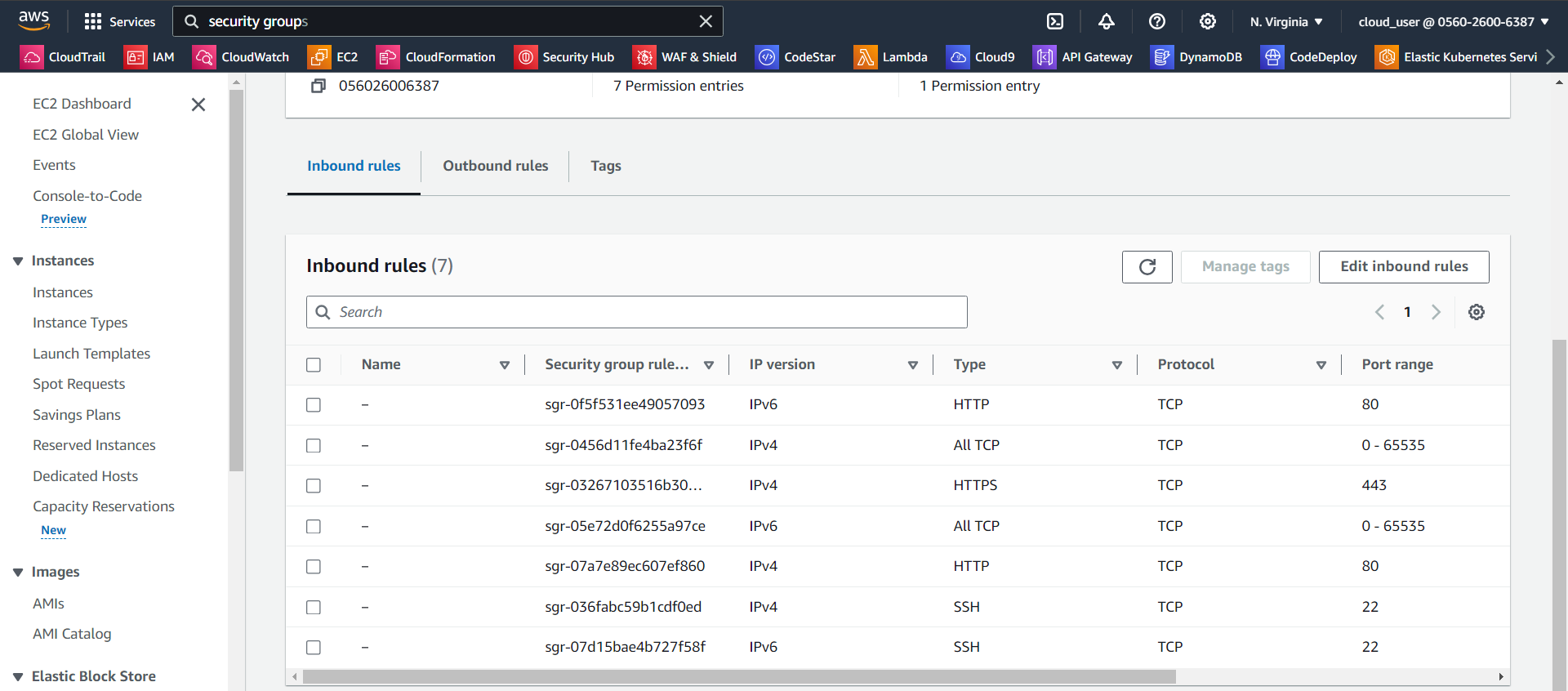

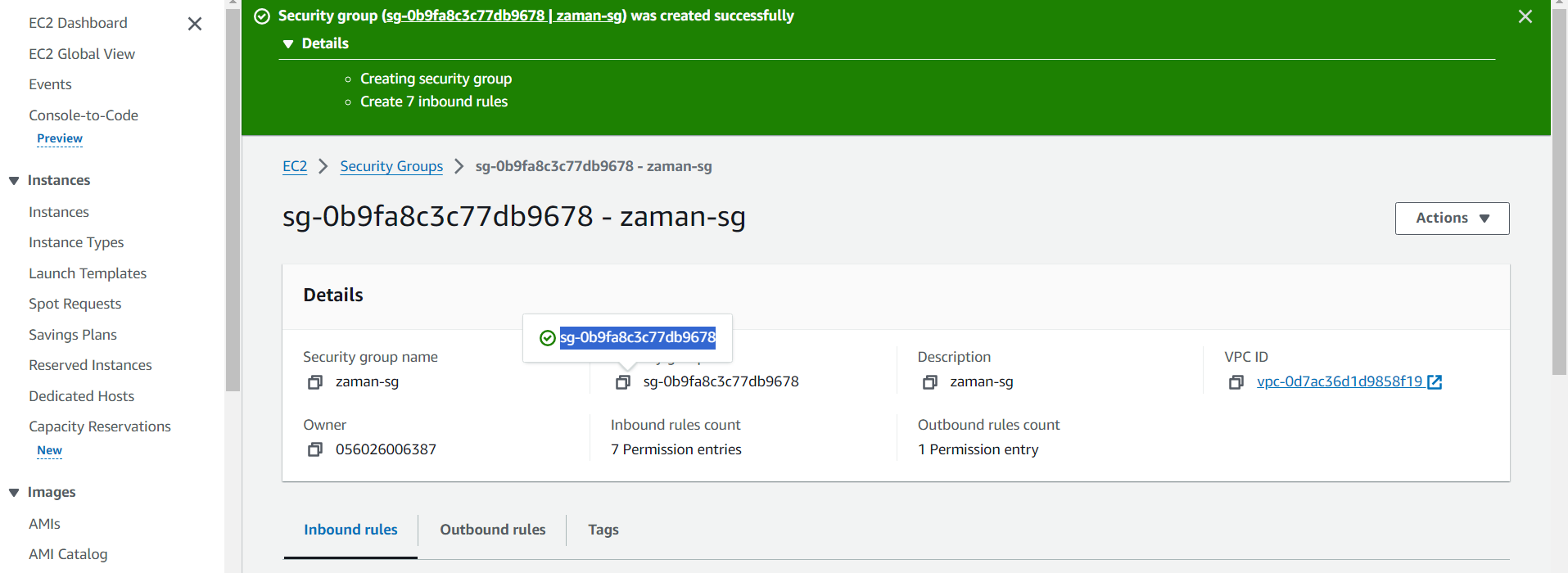

Create a security group and add inbound rules. For testing purposes we are allowing all HTTP and TCP connections for IPv4 and IPv6:

Copy the security group ID into the instance.tf file.

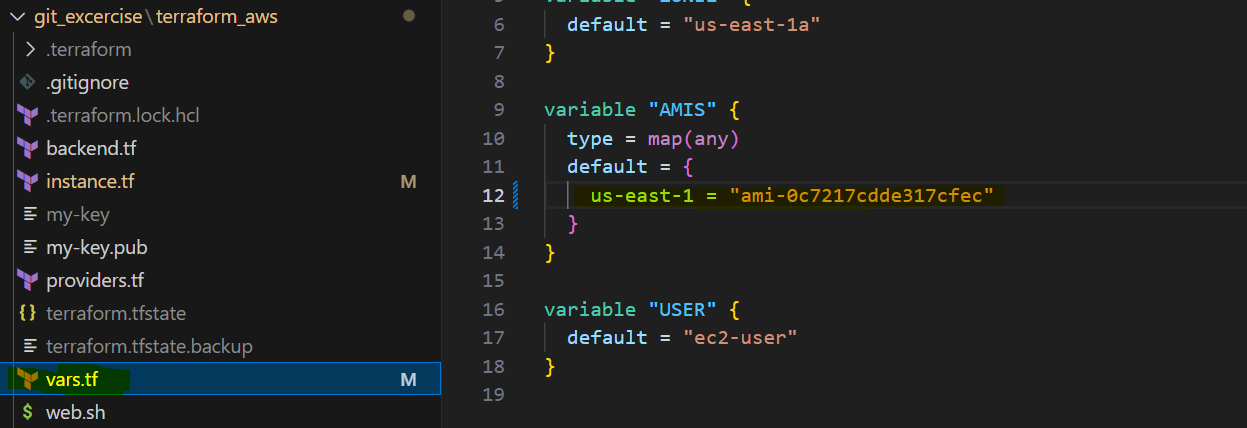

Update the AMI ID in vars.tf to an Amazon Machine Image (AMI) that is available in your region. AMI IDs are region-specific, so an ID copied from another region will not work.

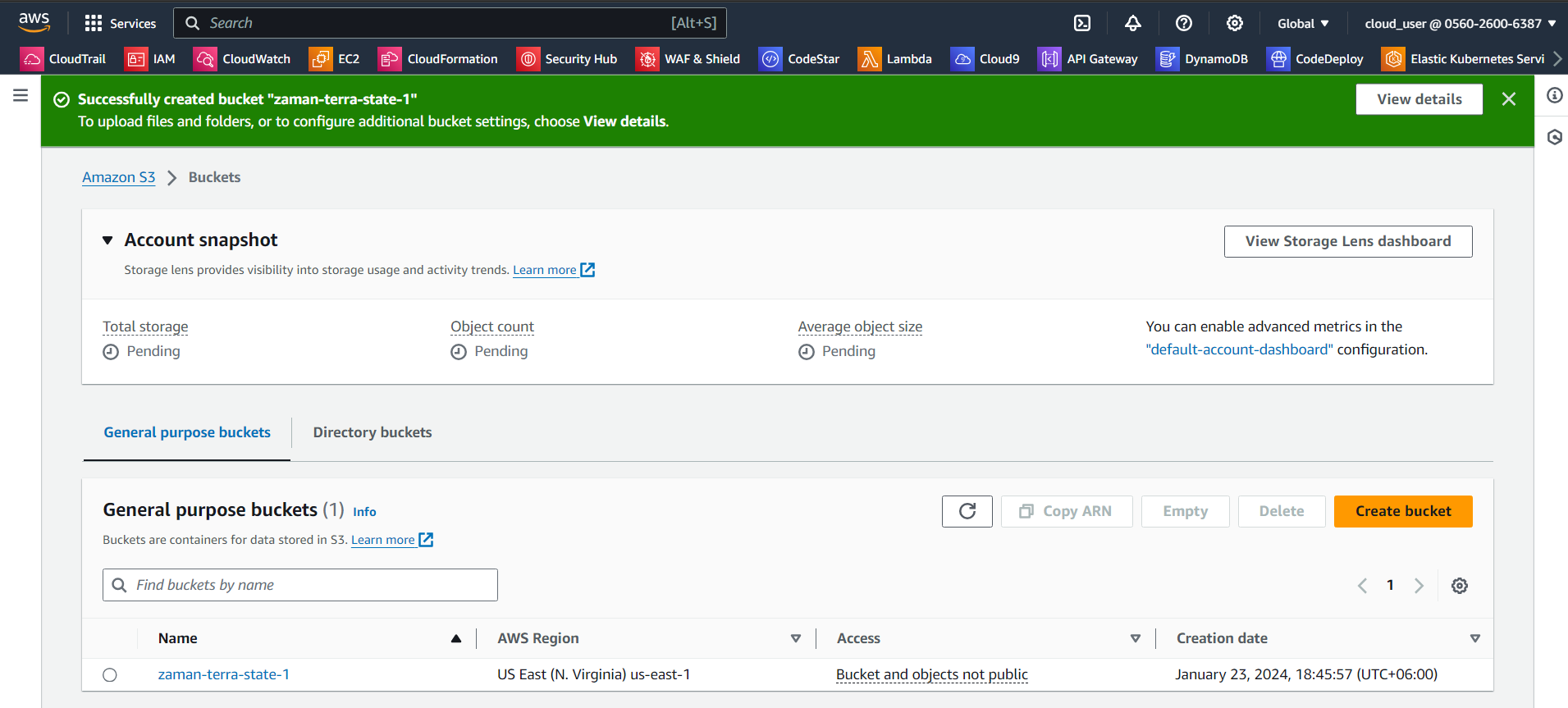

Now create a S3 bucket:

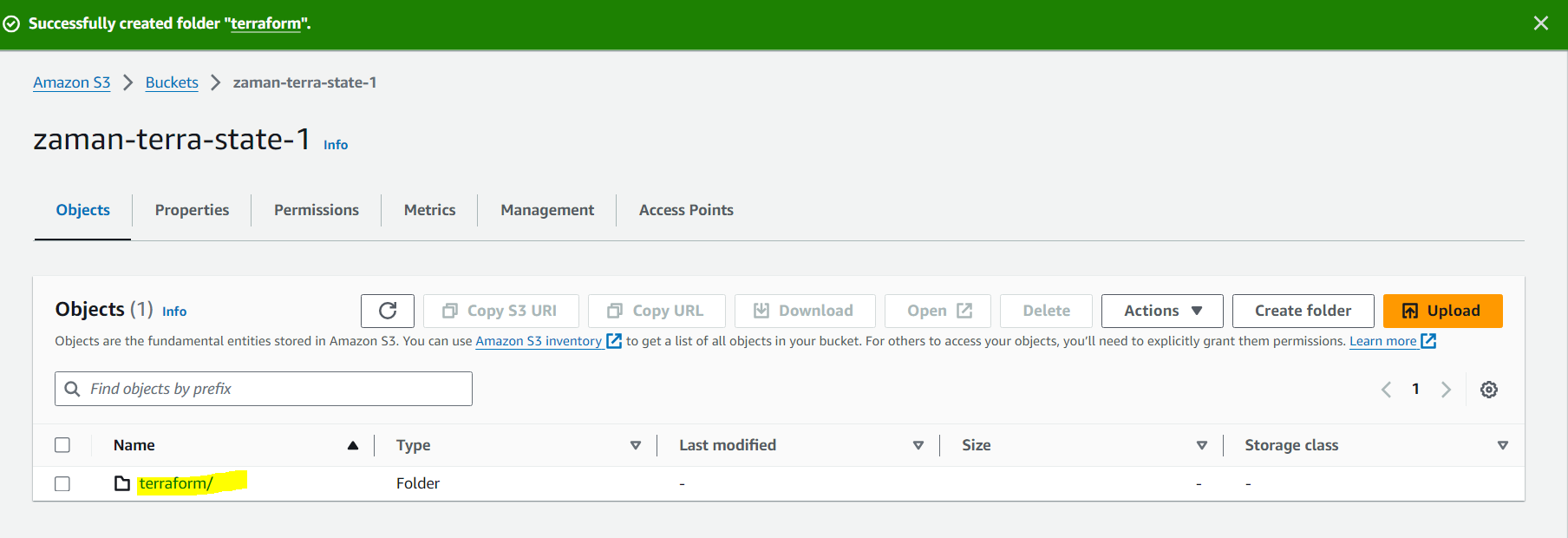

Create a repository inside bucket:

Update backend.tf values.
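As a rough guide to what backend.tf needs, here is a minimal sketch of an S3 backend block. The bucket name and key path below are hypothetical placeholders, not the values used in the repository; substitute the bucket and folder you created above. Note that a backend block cannot reference variables, so the values have to be written literally.

```hcl
# backend.tf (sketch; bucket and key are placeholder values, not from the repository)
terraform {
  backend "s3" {
    # Name of the S3 bucket created in the console (hypothetical name)
    bucket = "example-terraform-state-bucket"

    # Path of the state object inside the bucket: "<folder>/<state file name>" (hypothetical path)
    key = "terraform/terraform.tfstate"

    # Region where the bucket lives; assumed to match the us-east-1 default in vars.tf
    region = "us-east-1"
  }
}
```

With a block like this in place, terraform init configures the working directory to read and write state in the bucket instead of a local terraform.tfstate file.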

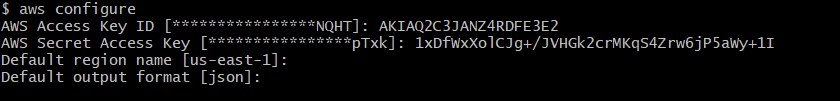

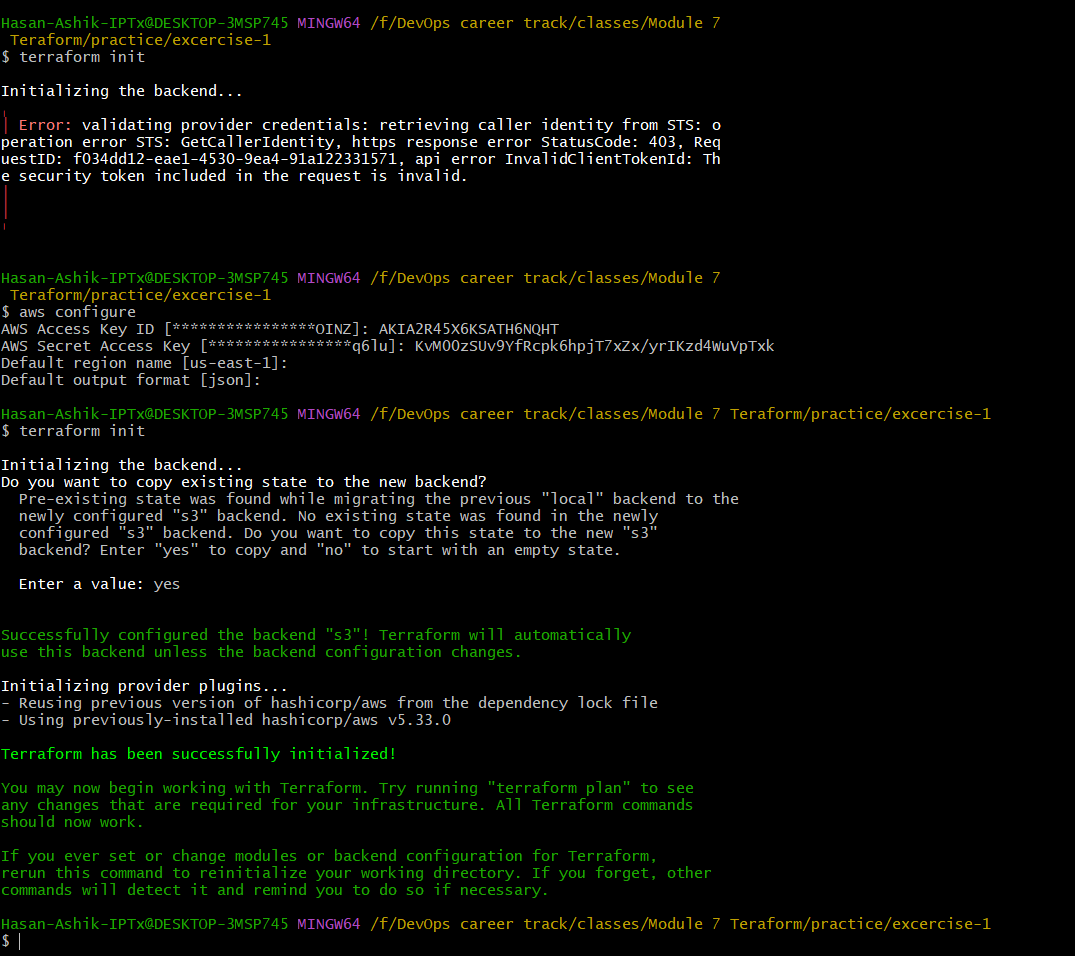

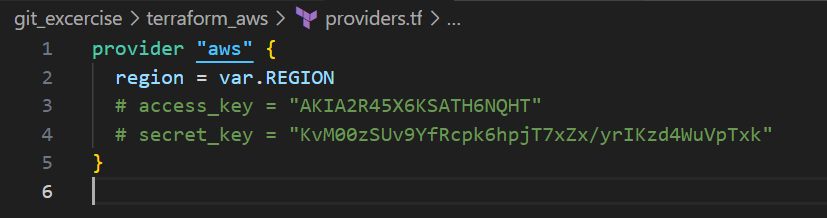

Update the providers.tf file with your access key and secret key, or configure your credentials from the CLI with aws configure.

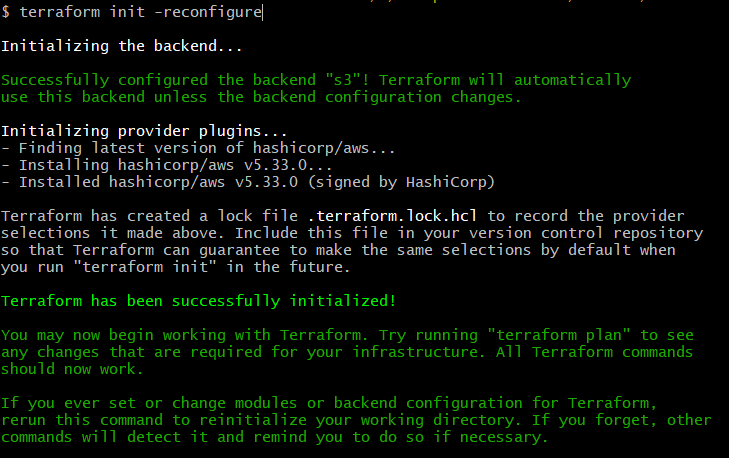

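Now let us initialize Terraform so that the state file is created in the folder of the S3 bucket.

Run terraform init. If the backend configuration has changed since a previous initialization, run terraform init -reconfigure instead.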

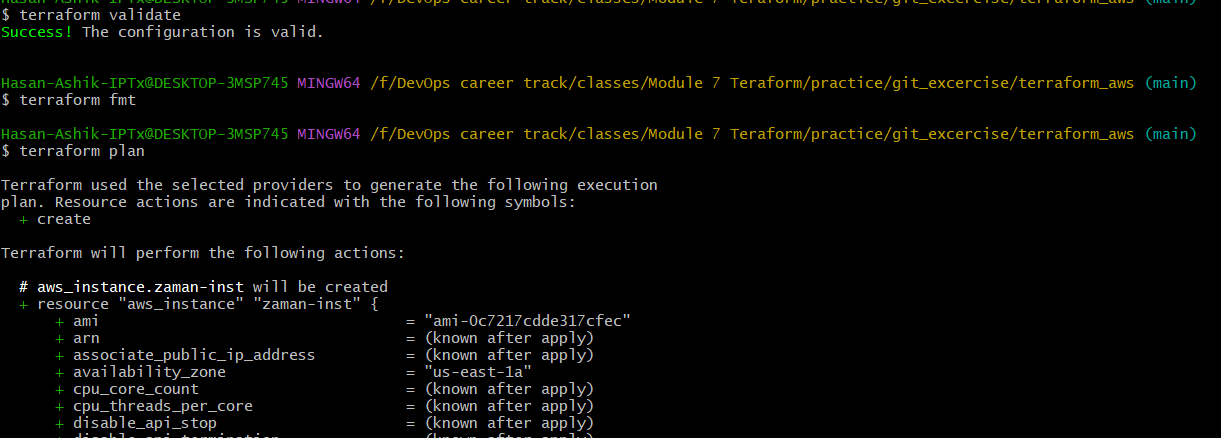

Run terraform validate, terraform fmt, and terraform plan.

And finally, run terraform apply.

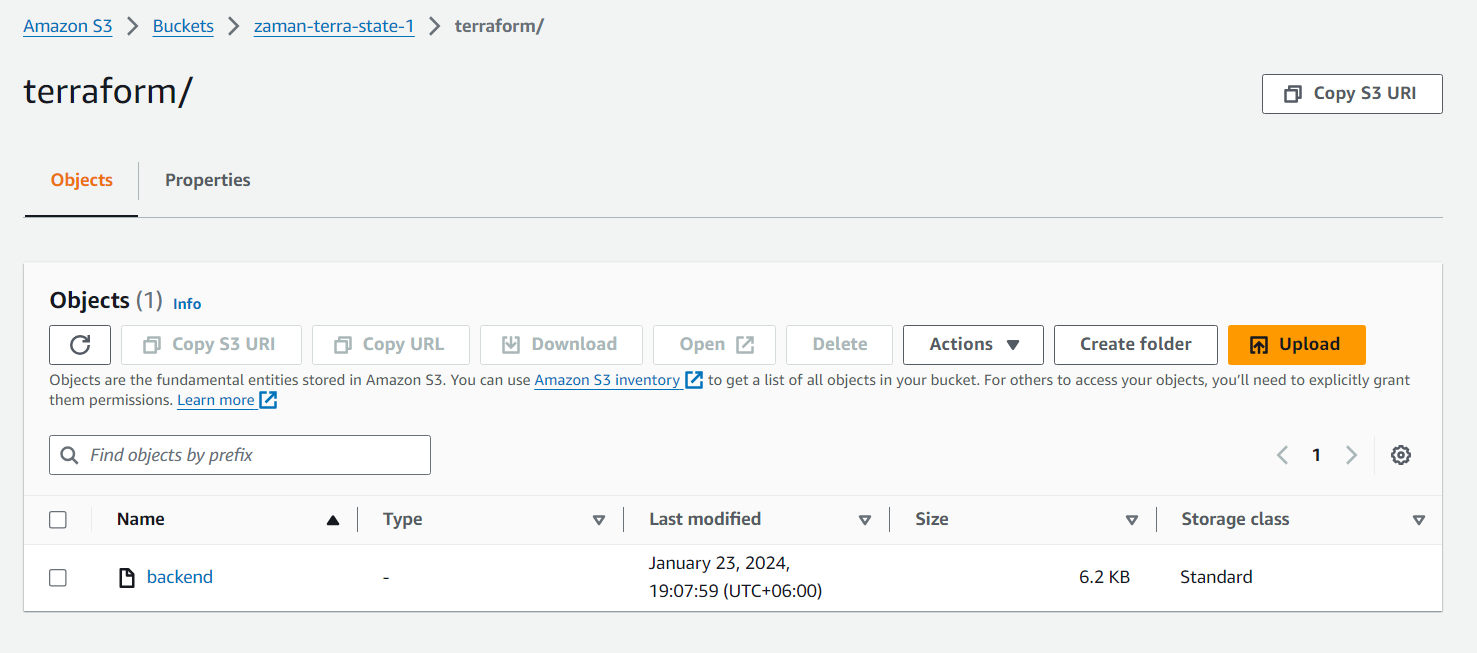

In the bucket we can now see that the state has been updated:

Part 2: Run a website inside an AWS EC2 VM using Terraform

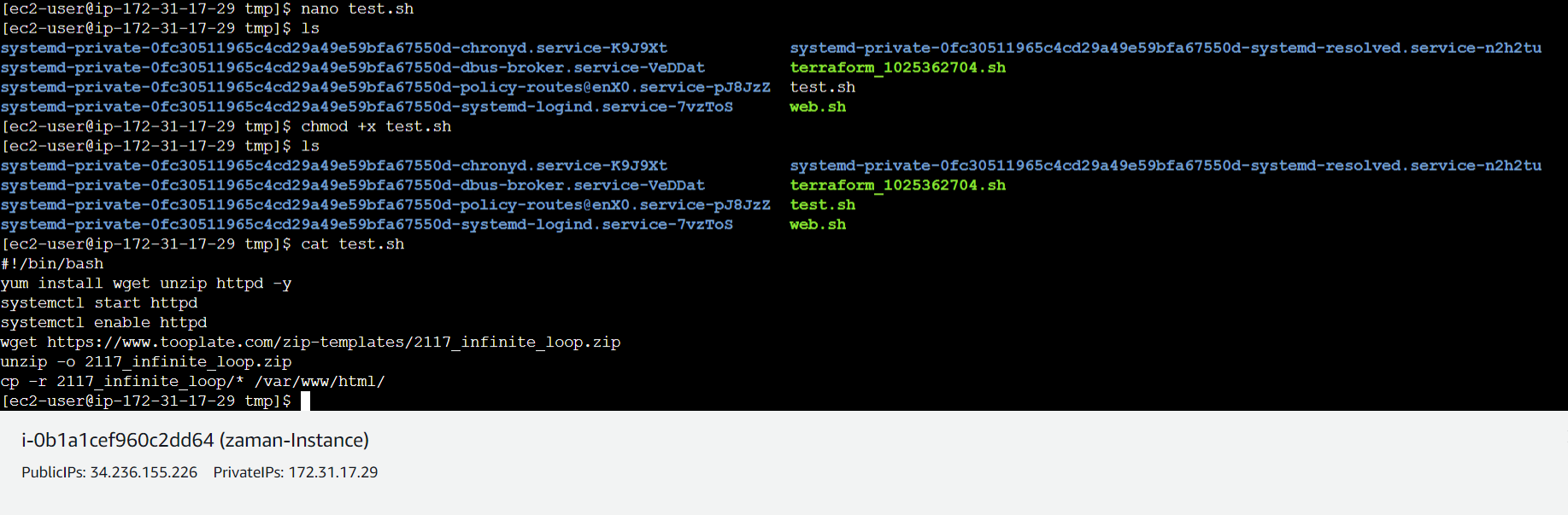

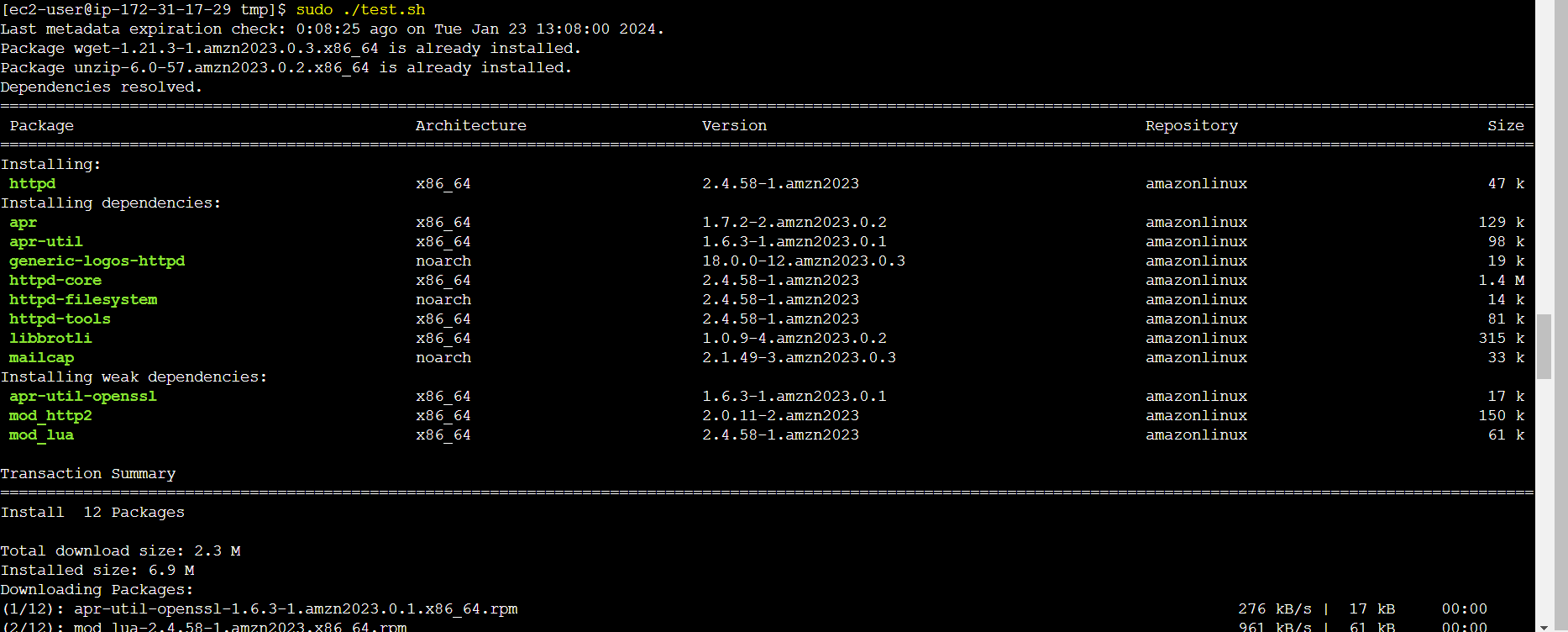

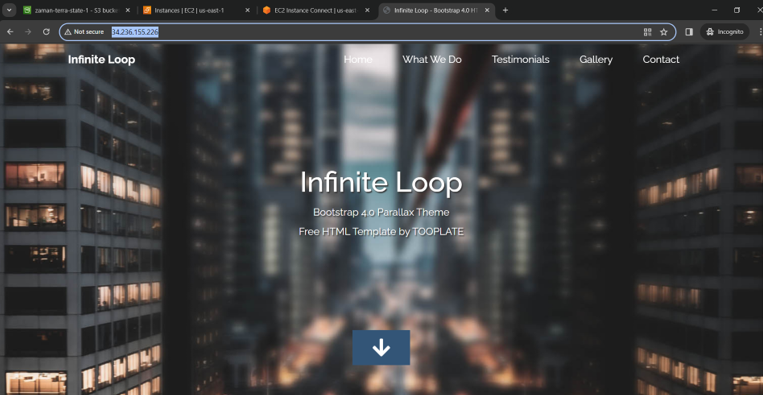

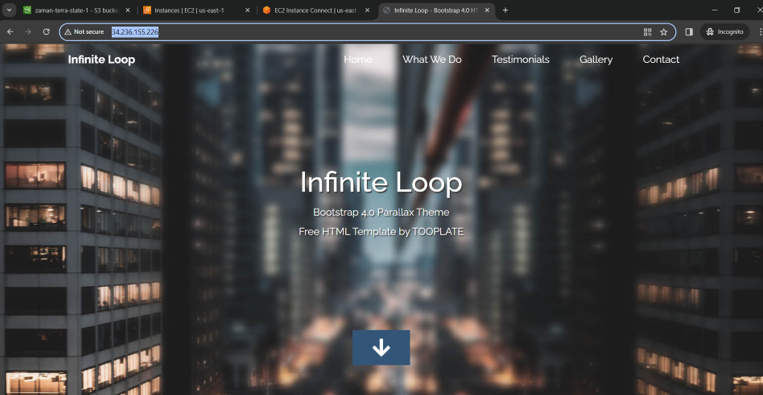

Our Part 1 Terraform scripts already pushed the bash script needed to run the web application to the VM. The web application should now be running at the public IP address of the VM.

In our case, we had to run web.sh manually after copying its contents into a new script file.

After that, the application was available at the public IP address of the VM.

Let’s now discuss the terraform scripts:

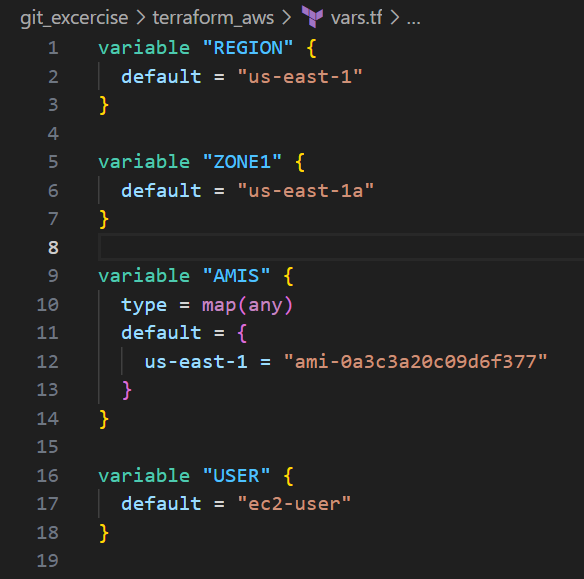

Vars.tf: It defines variables that the other Terraform files can reference, which gives us a flexible way to manage configuration parameters for the infrastructure resources. The REGION variable specifies the default AWS region as “us-east-1.” The ZONE1 variable sets the default availability zone to “us-east-1a.” The AMIS variable, of type map(any), holds Amazon Machine Image (AMI) IDs, with a default value assigned for the “us-east-1” region. Lastly, the USER variable defines the default username for connecting to EC2 instances as “ec2-user.”
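The description above corresponds roughly to the following sketch. The variable names and defaults come from the description; the AMI ID is a deliberately invalid placeholder and must be replaced with an AMI available in your region.

```hcl
# vars.tf (sketch reconstructed from the description; the AMI ID is a placeholder)
variable "REGION" {
  default = "us-east-1"
}

variable "ZONE1" {
  default = "us-east-1a"
}

variable "AMIS" {
  type = map(any)
  default = {
    # Region name => AMI ID. Replace the placeholder with a real AMI ID for your region.
    us-east-1 = "ami-xxxxxxxxxxxxxxxxx"
  }
}

variable "USER" {
  default = "ec2-user"
}
```

Keeping the AMI IDs in a map keyed by region means that supporting another region only requires adding one more entry to the map and changing REGION.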

Providers.tf: Defines an AWS provider block. We have commented out the access key and secret key because the credentials are configured with the aws configure command.
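A provider block of this kind might look like the sketch below. Taking the region from the REGION variable in vars.tf is an assumption here; the credential lines are left commented out, as described, so that the provider falls back to the credentials stored by aws configure.

```hcl
# providers.tf (sketch; reading the region from var.REGION is an assumption)
provider "aws" {
  region = var.REGION

  # Credentials are intentionally not hard-coded; the provider reads them
  # from the profile written by `aws configure`.
  # access_key = "..."
  # secret_key = "..."
}
```

Leaving credentials out of the file also avoids committing secrets to the Git repository.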

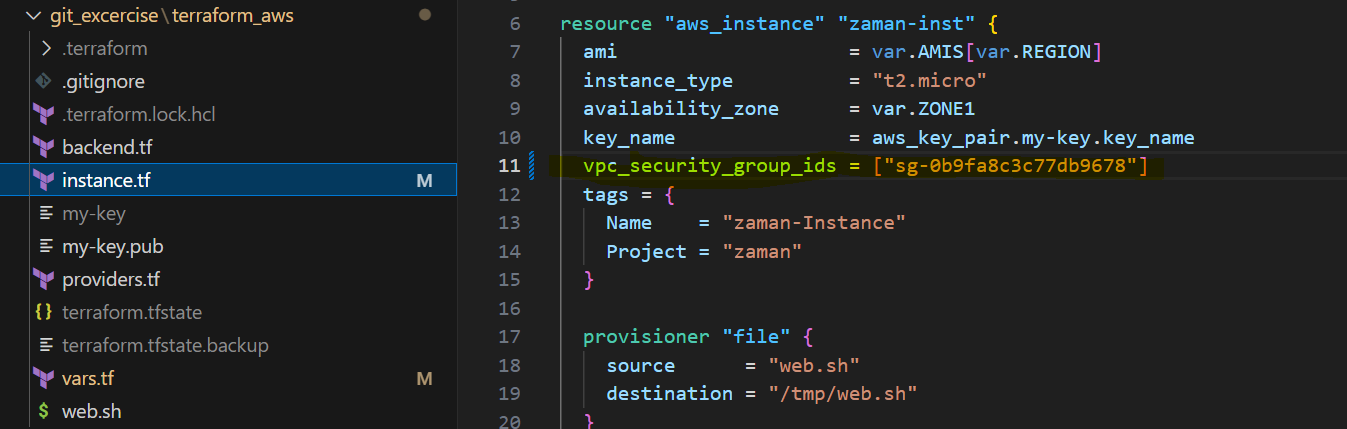

And finally, instance.tf: This file creates an AWS key pair and an EC2 instance.

The first resource, aws_key_pair, creates an SSH key pair named “my-key” using the public key from the file “my-key.pub.” The second resource, aws_instance, launches an EC2 instance named “zaman-inst” in the specified AWS region. It uses the AMI ID from the AMIS variable based on the chosen region, with instance type “t2.micro” and the availability zone from the ZONE1 variable. The instance is associated with a security group and tagged with a name and project. Additionally, the script provisions the instance by copying a local script (“web.sh”) to “/tmp/web.sh” on the remote instance with a file provisioner and then executing it with a remote-exec provisioner. The connection block specifies the SSH user, private key file, and host information for connecting to the instance.
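To make the description concrete, here is a hedged sketch of what such a file could look like. It is reconstructed from the description rather than copied from the repository: the resource label my_key, the security group ID, the Project tag value, the private key file name, and the exact remote-exec commands are all assumptions or placeholders.

```hcl
# instance.tf (sketch; labels, IDs, tag values, and commands marked below are assumptions)
resource "aws_key_pair" "my_key" {
  key_name   = "my-key"
  public_key = file("my-key.pub") # public key generated earlier with ssh-keygen
}

resource "aws_instance" "zaman-inst" {
  ami               = var.AMIS[var.REGION] # look up the AMI for the chosen region
  instance_type     = "t2.micro"
  availability_zone = var.ZONE1
  key_name          = aws_key_pair.my_key.key_name

  # Placeholder: paste the ID of the security group created in the console
  vpc_security_group_ids = ["sg-xxxxxxxxxxxxxxxxx"]

  tags = {
    Name    = "zaman-inst"      # assumed tag value
    Project = "example-project" # hypothetical tag value
  }

  # How Terraform reaches the instance over SSH for the provisioners below
  connection {
    type        = "ssh"
    user        = var.USER
    private_key = file("my-key") # assumed name of the private key file
    host        = self.public_ip
  }

  # Copy the local script to the instance
  provisioner "file" {
    source      = "web.sh"
    destination = "/tmp/web.sh"
  }

  # Make the script executable and run it (commands are an assumption)
  provisioner "remote-exec" {
    inline = [
      "chmod +x /tmp/web.sh",
      "sudo /tmp/web.sh",
    ]
  }
}
```

Provisioners run only when the resource is created, so if the script fails or is changed later, the instance has to be recreated or the script run by hand, which matches the manual step described in Part 2.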
